Time series analysis is a cornerstone of financial modeling, helping us understand trends, forecast future values, and make informed decisions. However, not all time series are created equal. Some exhibit properties that make them easier to analyze, while others require more sophisticated techniques. One such challenging category is non-stationary time series. In this article, I will explore the theory behind non-stationary time series, its implications in finance, and how we can effectively model and analyze such data.

What is a Non-Stationary Time Series?

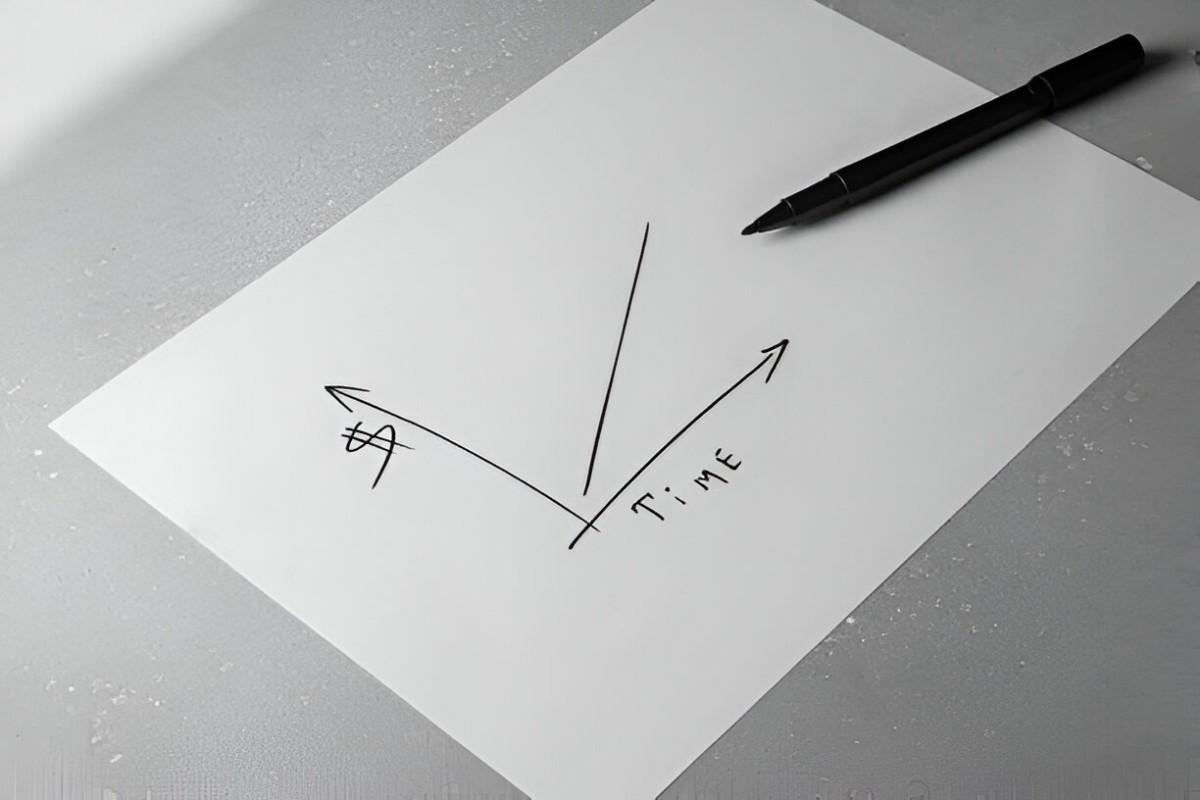

A time series is a sequence of data points collected or recorded at specific time intervals. In finance, this could be stock prices, interest rates, or GDP growth. A time series is said to be stationary if its statistical properties—such as mean, variance, and autocorrelation—do not change over time. Conversely, a non-stationary time series has statistical properties that evolve over time, making it more complex to analyze.

Why Does Non-Stationarity Matter?

Non-stationarity is a critical concept in finance because many financial time series exhibit trends, seasonality, or other forms of time-dependent behavior. For example, stock prices often trend upward or downward over time, and GDP growth rates fluctuate with economic cycles. Ignoring non-stationarity can lead to misleading conclusions, such as spurious correlations or inaccurate forecasts.

Types of Non-Stationarity

Non-stationary time series can be broadly categorized into two types:

- Trend Stationary: The series fluctuates around a deterministic trend. Removing the trend makes the series stationary.

- Difference Stationary: The series contains a stochastic trend, and differencing the series makes it stationary.

Let’s explore these concepts in detail.

Trend Stationary Series

A trend stationary series can be expressed as:

$$y_t = \alpha + \beta t + \epsilon_t$$

Here, $y_t$ is the observed value at time $t$, $\alpha$ is the intercept, $\beta t$ represents the deterministic trend, and $\epsilon_t$ is a stationary error term.

For example, consider a company’s quarterly revenue that grows linearly over time. The deterministic trend can be removed by regressing the series on time and analyzing the residuals.

Difference Stationary Series

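A difference stationary series, also known as a unit root process, contains a stochastic trend. Its simplest form is the random walk:

$$y_t = y_{t-1} + \epsilon_t$$

Here, the current value equals the previous value plus a random shock $\epsilon_t$, so every shock has a permanent effect on the level of the series. Such series require differencing to achieve stationarity. For example, if we difference the series once, we get:

$$\Delta y_t = y_t - y_{t-1} = \epsilon_t$$

Provided $\epsilon_t$ is itself stationary (for example, white noise), the differenced series is stationary.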
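As a rough illustration, the random walk and its first difference can be simulated with NumPy. The seed, sample size, and variable names are assumptions chosen for this sketch, not values from any real data set.

```python
import numpy as np

rng = np.random.default_rng(42)      # fixed seed, chosen arbitrarily
n = 500                              # illustrative sample size
eps = rng.normal(0.0, 1.0, size=n)   # white-noise shocks epsilon_t

# Random walk: y_t = y_{t-1} + eps_t, started at y_0 = eps_0 (a cumulative sum).
y = np.cumsum(eps)

# First difference: dy_t = y_t - y_{t-1}, which recovers the shocks.
dy = np.diff(y)

print(np.allclose(dy, eps[1:]))      # differences equal eps_1, ..., eps_{n-1}
print(f"sample variance of levels:      {y.var():.2f}")
print(f"sample variance of differences: {dy.var():.2f}")
```

The levels wander without returning to a fixed mean, while the differences behave like the original shocks.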

Testing for Non-Stationarity

Before modeling a time series, it’s essential to determine whether it is stationary or not. Several statistical tests can help us identify non-stationarity.

Augmented Dickey-Fuller (ADF) Test

The ADF test is one of the most widely used tests for stationarity. It tests the null hypothesis that a unit root is present in the series. The test equation is:

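$$\Delta y_t = \alpha + \beta t + \gamma y_{t-1} + \sum_{i=1}^{p} \delta_i \Delta y_{t-i} + \epsilon_t$$

Here, $\gamma$ is the coefficient of interest, and the $p$ lagged differences absorb serial correlation in the errors. Under the null hypothesis $\gamma = 0$, the series has a unit root and is non-stationary; the alternative is $\gamma < 0$. Because the test statistic does not follow a standard t-distribution under the null, it is compared against Dickey-Fuller critical values.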

KPSS Test

The KPSS test takes the opposite approach. It tests the null hypothesis that the series is stationary around a deterministic trend. If the test statistic exceeds a critical value, we reject the null hypothesis and conclude that the series is non-stationary.

Modeling Non-Stationary Time Series

Once we identify a series as non-stationary, we need to apply appropriate techniques to model it effectively. Below are some common approaches.

Differencing

Differencing is a straightforward method to remove stochastic trends. For example, if we have a series $y_t$, the first difference is:

$$\Delta y_t = y_t - y_{t-1}$$

If the differenced series is stationary, we can model it using techniques like ARIMA.
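The short sketch below shows differencing on a handful of made-up numbers, along with the reverse operation (cumulative summation, or "integration") that turns differences back into levels. The values are hypothetical and chosen only so the arithmetic is easy to follow.

```python
import numpy as np

y = np.array([100.0, 102.0, 101.0, 105.0, 110.0])   # made-up levels

dy = np.diff(y)          # first difference  -> [ 2. -1.  4.  5.]
d2y = np.diff(y, n=2)    # second difference -> [-3.  5.  1.]

# Integration: the starting level plus the running sum of differences
# rebuilds the original series.
y_rebuilt = np.concatenate(([y[0]], y[0] + np.cumsum(dy)))

print(dy)
print(d2y)
print(np.allclose(y_rebuilt, y))   # True
```

Each round of differencing shortens the series by one observation, and the initial level must be kept if forecasts of differences are later converted back to levels.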

Detrending

For trend stationary series, we can remove the deterministic trend by fitting a regression model and analyzing the residuals. For example:

$$y_t = \alpha + \beta t + \epsilon_t$$

After estimating $\alpha$ and $\beta$, the residuals $\epsilon_t$ should be stationary.
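A minimal sketch of this regression on simulated data uses `np.polyfit` for the least-squares fit. The intercept, slope, noise level, and seed are hypothetical values picked for the example.

```python
import numpy as np

rng = np.random.default_rng(7)               # arbitrary seed
n = 200
t = np.arange(n)
alpha_true, beta_true = 10.0, 0.5            # hypothetical intercept and slope
y = alpha_true + beta_true * t + rng.normal(0.0, 2.0, size=n)

# Least-squares fit of y on a constant and t.
# np.polyfit returns the highest-degree coefficient first.
beta_hat, alpha_hat = np.polyfit(t, y, deg=1)

# Detrended series: what remains after removing the fitted line.
residuals = y - (alpha_hat + beta_hat * t)

print(f"alpha_hat={alpha_hat:.2f}, beta_hat={beta_hat:.3f}")
print(f"residual mean={residuals.mean():.2e}")
```

The residuals can then be passed to a stationarity test. If they still look non-stationary, the series is probably not trend stationary, and differencing may be the better choice.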

ARIMA Models

ARIMA (AutoRegressive Integrated Moving Average) models are specifically designed for non-stationary series. The “I” in ARIMA stands for integration, which refers to differencing the series to achieve stationarity. An ARIMA(p, d, q) model has three components:

- AR(p): Autoregressive component of order p.

- I(d): Differencing component of order d.

- MA(q): Moving average component of order q.

For example, an ARIMA(1, 1, 1) model can be written as:

$$(1 - \phi_1 L)(1 - L)y_t = (1 + \theta_1 L)\epsilon_t$$

Here, $L$ is the lag operator, and $\phi_1$ and $\theta_1$ are parameters to be estimated.
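To see what the lag-operator form means in terms of observed values, it can help to expand the ARIMA(1, 1, 1) equation using $L y_t = y_{t-1}$. The derivation below is a worked expansion of that equation and introduces no new assumptions:

```latex
\begin{aligned}
(1 - \phi_1 L)(1 - L)\,y_t &= (1 + \theta_1 L)\,\epsilon_t \\
\bigl(1 - (1 + \phi_1)L + \phi_1 L^2\bigr)\,y_t &= \epsilon_t + \theta_1 \epsilon_{t-1} \\
y_t &= (1 + \phi_1)\,y_{t-1} - \phi_1\,y_{t-2} + \epsilon_t + \theta_1 \epsilon_{t-1} \\
\text{equivalently}\quad \Delta y_t &= \phi_1\,\Delta y_{t-1} + \epsilon_t + \theta_1 \epsilon_{t-1}
\end{aligned}
```

The last line shows that an ARIMA(1, 1, 1) model for $y_t$ is an ARMA(1, 1) model for the first difference $\Delta y_t$.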

Cointegration

In some cases, multiple non-stationary series may have a long-term equilibrium relationship. For example, stock prices and dividends may move together over time. Cointegration analysis helps us identify and model such relationships.

The Engle-Granger two-step method is a common approach to test for cointegration. First, we estimate the long-run relationship:

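$$y_t = \alpha + \beta x_t + \epsilon_t$$

Then, we test the residuals $\epsilon_t$ for stationarity using the ADF test. If the residuals are stationary, the series are cointegrated. Because the residuals come from an estimated regression, the test statistic should be compared against Engle-Granger critical values rather than the standard ADF ones.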

Practical Example: Modeling US GDP Growth

Let’s apply these concepts to a real-world example: modeling US GDP. The level of GDP (as opposed to its growth rate) is typically non-stationary because it trends upward over time.

Step 1: Visual Inspection

First, I plot the US GDP time series to visually inspect for trends and seasonality. The plot shows a clear upward trend, suggesting non-stationarity.

Step 2: ADF Test

Next, I perform an ADF test to confirm non-stationarity. The test yields a p-value greater than 0.05, so I fail to reject the null hypothesis of a unit root.

Step 3: Differencing

I difference the series once and re-run the ADF test. This time, the p-value is less than 0.05, indicating that the differenced series is stationary.

Step 4: ARIMA Modeling

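I fit an ARIMA(1, 1, 1) model to the original series. Because d = 1, the model applies the first difference internally, which is equivalent to fitting an ARMA(1, 1) model to the differenced series; fitting ARIMA(1, 1, 1) to data that has already been differenced would difference it twice. The model parameters are estimated using maximum likelihood, and diagnostic checks confirm that the residuals are white noise.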

Step 5: Forecasting

Finally, I use the fitted ARIMA model to forecast future GDP growth. The forecasts show a gradual increase, consistent with historical trends.

Challenges and Considerations

While non-stationary time series modeling is powerful, it comes with challenges. Over-differencing discards information about the level of the series and introduces a non-invertible moving average component, and misspecification of the ARIMA model can result in poor forecasts. Additionally, structural breaks (sudden changes in the series due to events like financial crises) can complicate analysis, in part because unit root tests can mistake a broken trend for a unit root.

To address these challenges, I recommend:

- Robust Testing: Use multiple tests (e.g., ADF and KPSS) to confirm stationarity.

- Model Diagnostics: Check residuals for white noise and ensure model assumptions are met.

- Structural Break Analysis: Test for and account for structural breaks using techniques like the Chow test, which applies when the candidate break date is known in advance.
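As an illustration of the last recommendation, the Chow test compares one regression fitted to the whole sample against two regressions fitted on either side of a candidate break. The sketch below is a hand-rolled version in NumPy. The function name `chow_f_stat`, the simulated data, and the break location are all assumptions, and the test presumes the break date is known and the regression errors are stationary.

```python
import numpy as np

def chow_f_stat(y, X, break_idx):
    """Chow F-statistic for a single break at a known index."""
    def rss(y_part, X_part):
        beta, *_ = np.linalg.lstsq(X_part, y_part, rcond=None)
        resid = y_part - X_part @ beta
        return float(resid @ resid)

    n, k = X.shape
    rss_pooled = rss(y, X)                                   # one regime
    rss_split = (rss(y[:break_idx], X[:break_idx])
                 + rss(y[break_idx:], X[break_idx:]))        # two regimes
    return ((rss_pooled - rss_split) / k) / (rss_split / (n - 2 * k))

rng = np.random.default_rng(5)                 # arbitrary seed
n = 200
t = np.arange(n, dtype=float)
X = np.column_stack([np.ones(n), t])           # constant and linear trend
y_stable = 1.0 + 0.05 * t + rng.normal(size=n)
y_break = y_stable + np.where(t >= 100, 3.0, 0.0)   # level shift at t = 100

f_stable = chow_f_stat(y_stable, X, 100)
f_break = chow_f_stat(y_break, X, 100)
print(f"F without break: {f_stable:.2f}")
print(f"F with break:    {f_break:.2f}")
```

Under the null of no break, the statistic follows an F distribution with k and n - 2k degrees of freedom, so a value far above the corresponding critical value points to a break at the tested date.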

Conclusion

Non-stationary time series are ubiquitous in finance, and understanding how to model them is essential for accurate analysis and forecasting. By applying techniques like differencing, detrending, and ARIMA modeling, we can transform non-stationary series into stationary ones and extract meaningful insights. However, careful testing and diagnostics are crucial to avoid pitfalls and ensure robust results.